The hidden security risks of the new AI powered browsers trend

It is undeniable. After the explosive rise of AI in general and more specifically, Generative AI in the past few years, it is now more or less mainstream. But as the world is focusing on claims like the race to AGI (Artificial General Intelligence), another use of AI is penetrating our daily lives: and it is not risk free! In this article we delve into the world of AI powered and Agentic browsers that seem to pop up everywhere and look into the risks this might pose for your organization. A lot has been written about data leakage by uploading it to AI platforms. But there are more risks than just that!

The emerging risk: agentic browsers & AI assistants

The rise of "agentic" browsers and AI assistant features—capable of navigating authenticated sessions, reading content, taking actions on behalf of users—marks a shift in how organisations must think about cybersecurity. A recent disclosure by Brave Software (disclaimer: they also are active in the AI browser domain), titled "Unseeable prompt injections in screenshots: more vulnerabilities in Comet and other AI browsers", reveals that hidden or embedded instructions in webpage-content or images can be picked up by these agents and executed as commands.

Previously, Brave reported on indirect prompt injection vulnerabilities in the Perplexity Comet Browser—where the agent confused user intent with untrusted webpage content.

These risks aren't niche. Independent academic work like recently published on arXiv shows that more than 40 % of tested agents may be induced via prompt injections to reveal sensitive data or perform unintended actions. Security-press articles like Malwarebytes' article by Pieter Arntz likewise warn that AI/agentic browsers "could leave users penniless" if they treat hacked instructions as legitimate commands.

From a CISO's viewpoint, what changes is this: the trusted browser paradigm (a human in the loop, visible UI cues, origin isolation) is replaced by an agent that executes on behalf of the user—and that agent may misinterpret or follow malicious instructions embedded in content. Traditional controls like Same-Origin Policy or CORS may not apply when the agent itself is acting with user privileges. Tom's Hardware

Implications for enterprise use

When your organisation deploys a productivity assistant (such as Microsoft 365 Copilot or any AI browser with agentic features), several risk vectors emerge:

- Data exfiltration and lateral movement: If the agent has access to internal systems or authenticated web sessions, an injection could cause it to navigate, extract or act on privileged data.

- Privilege escalation via automation: A hidden instruction could cause the agent to trigger workflows, make changes in identity/CMDB or create new user access tokens.

- Phishing and social engineering at scale: Because the agent trusts page content in its prompt context, attackers can craft benign-looking content (even images or screenshots) with hidden instructions. Brave described a method using nearly invisible text in a screenshot.

- Governance and compliance exposure: With generative AI features, mislabeled content, insufficient monitoring or permissions creep can lead to regulatory issues. For example, Microsoft's documentation indicates that while architecture has strong controls, "organisations still bear responsibility for mis-configured access and risks like social-engineering attacks."

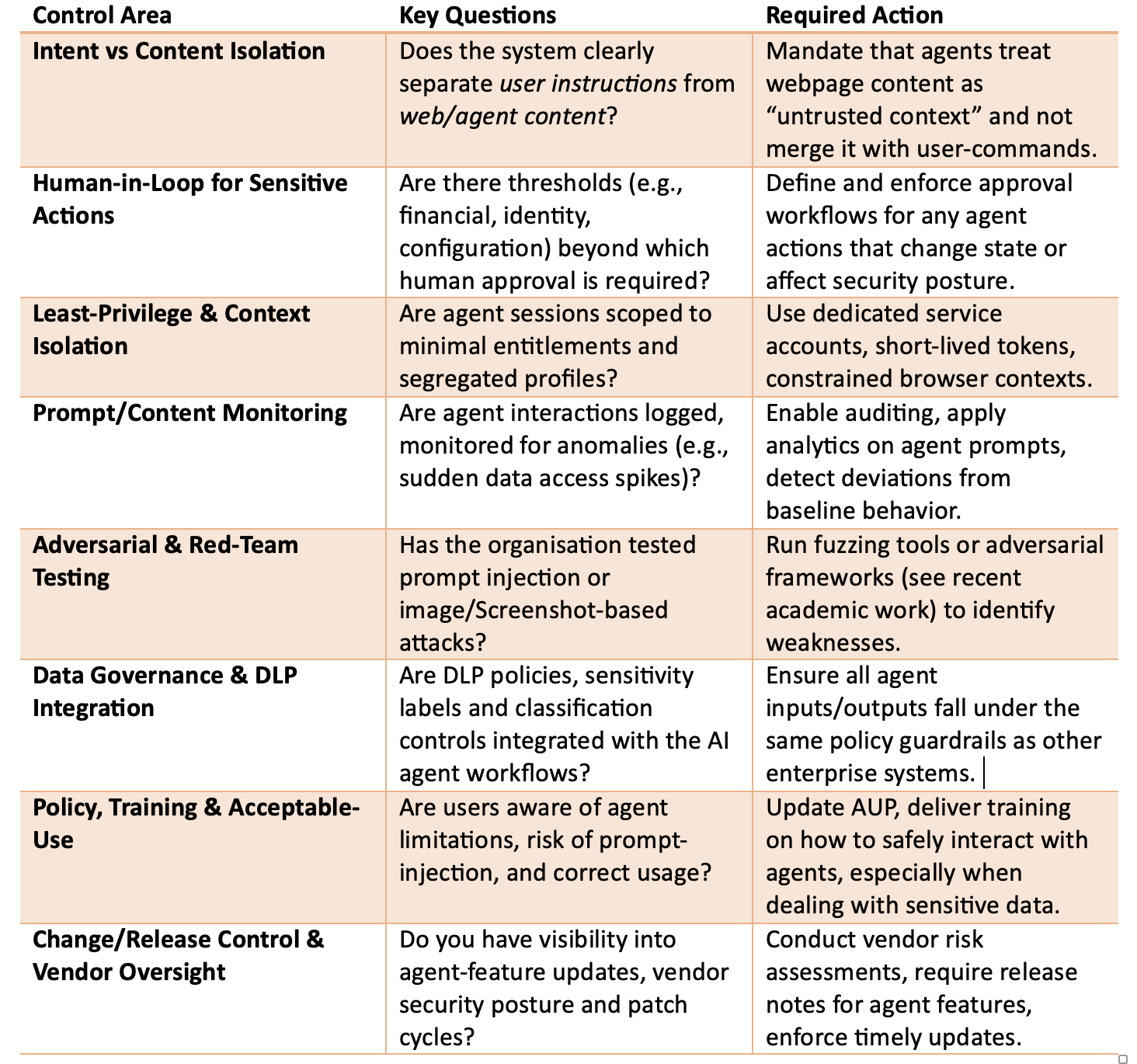

What you should do: strategic guardrails

As CISO, you don't need to be the product expert—but you do need to hold the business accountable for safe deployment. Below is a practical checklist you can present to your leadership team and technical stakeholders.

CISO-Level Practical Checklist

Also bear in mind: technology vendors are already aware and shipping controls. For example, Microsoft outlines its architecture for Copilot that includes prompt injection filters, tenant isolation, encryption, entitlement scoping and DLP integration. However, the vendor controls do not replace your responsibility. You must still configure, monitor and enforce governance. Ensure you have the skills on board and that this is prioritized.

Agentic browsers and AI assistants represent a significant productivity leap — but from a CISO standpoint, they represent a stretched trust boundary. What used to be a user with a browser is now an autonomous agent acting on behalf of the user, potentially across authenticated sessions and corporate systems. If compromised via prompt injection, those agents could operate as adversary entry-points.The role of a CISO is to ensure that the business captures the value of these capabilities without inheriting avoidable risks. Enforce separation of user intent vs untrusted content, demand human oversight of high-impact actions, apply least-privilege models, monitor behavior, conduct adversarial testing, and integrate AI workflows into existing governance and DLP frameworks. With those guardrails in place, your organisation can innovate with AI and agentic tools safely — rather than reactively after a breach.